So, what is xUnit.net? xUnit.net is a unit testing tool for the .NET Framework that works with languages such as C# and VB.NET. It was created by the original inventor of the NUnit testing framework and seeks to address some of the shortcomings of NUnit in use. It's free and open source, licensed under the Apache License, Version 2.0.

Tests written with xUnit.net can be run with the Visual Studio 2012/2013 Test Runner, ReSharper, CodeRush, and TestDriven.NET, and they can also be executed from the command line. For updates on xUnit, you can follow @xunit, @jamesnewkirk, or @bradwilson on Twitter. The home of the project is xunit.codeplex.com.

So, with that background, let's get started.

Create a class library project

Let's start by creating a class library project targeting .NET 4.5 (or later). Open Visual Studio and choose File > New > Project.

2. Extract the downloaded xUnit binaries and add the two DLLs as references to your class library project. You can also add Selenium WebDriver at this point, either from the binaries you have already downloaded or through its NuGet package.

3. Now you can write your normal Selenium test cases in the xUnit framework, marking each test method with one of xUnit's attributes, either [Theory] or [Fact]. The xUnit documentation covers both attributes in more detail.

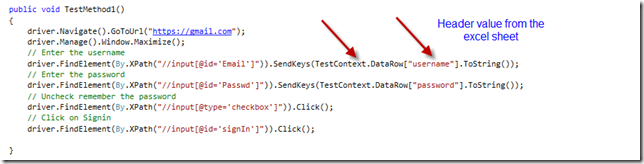

4. A simple use case, a Gmail login test, looks as follows:
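As a rough sketch of what such a test might look like (not the original code), the class below uses a [Fact] with Selenium's FirefoxDriver. The class and method names, the element IDs (Email, Passwd, signIn), the credentials, and the final title check are all illustrative assumptions; inspect the live login page and adjust them to what you actually find there.

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Firefox;
using Xunit;

// Hypothetical test class; xUnit creates a new instance for every test
// and calls Dispose afterwards, so each test gets a fresh browser.
public class GmailLoginTests : IDisposable
{
    private readonly IWebDriver driver;

    public GmailLoginTests()
    {
        driver = new FirefoxDriver();
    }

    [Fact]
    public void LoginPageAcceptsCredentials()
    {
        driver.Navigate().GoToUrl("https://mail.google.com");

        // The element IDs below are assumptions, not verified against the page.
        driver.FindElement(By.Id("Email")).SendKeys("someone@example.com");
        driver.FindElement(By.Id("Passwd")).SendKeys("not-a-real-password");
        driver.FindElement(By.Id("signIn")).Click();

        // Placeholder check; replace it with an assertion that fits
        // the page you expect to land on.
        Assert.Contains("Gmail", driver.Title);
    }

    public void Dispose()
    {
        // Close the browser even when the test fails.
        driver.Quit();
    }
}
```

Creating the driver in the constructor and quitting it in Dispose keeps the browser lifecycle out of the test body, which makes the later move to a data-driven test a smaller change.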

4. Now, to make this test data-driven using Excel, we start by creating an Excel data sheet. Let's call it SampleData.xls.

5. Create a named range in the Excel sheet for the data. To do so:

- Highlight the desired range of cells in the worksheet, right-click it, and choose Define Name.

- Type the desired name for that range in the Name box, such as TestData.

6. Once the named range is defined, save the workbook in the solution folder as .xls, not .xlsx (make sure it is the Excel 2003 .xls format; for some reason I never got it to work with .xlsx). Also make sure the file's "Copy to Output Directory" property is set to "Copy always".

7. Now we need to add a new attribute below [Theory], called [ExcelData]. It takes the file name and a query statement; the query selects all data from the named range TestData. With it, the test above can be made data-driven as shown below.
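A minimal sketch of a data-driven test, assuming xUnit.net 1.x, where [Theory] and [ExcelData] live in the Xunit.Extensions namespace (xunit.extensions.dll). It also assumes that the TestData range has exactly two text columns, user name and password, and that its first row holds column headers. The class and method names and the element IDs are hypothetical.

```csharp
using System;
using OpenQA.Selenium;
using OpenQA.Selenium.Firefox;
using Xunit;
using Xunit.Extensions; // xUnit.net 1.x: [Theory] and [ExcelData]

// Hypothetical test class; the test method runs once per data row
// returned by the query against SampleData.xls.
public class GmailLoginDataDrivenTests : IDisposable
{
    private readonly IWebDriver driver;

    public GmailLoginDataDrivenTests()
    {
        driver = new FirefoxDriver();
    }

    [Theory]
    [ExcelData("SampleData.xls", "select * from TestData")]
    public void LoginWithCredentialsFromExcel(string userName, string password)
    {
        driver.Navigate().GoToUrl("https://mail.google.com");

        // The element IDs below are assumptions, not verified against the page.
        driver.FindElement(By.Id("Email")).SendKeys(userName);
        driver.FindElement(By.Id("Passwd")).SendKeys(password);
        driver.FindElement(By.Id("signIn")).Click();

        // Placeholder check; replace it with an assertion that fits
        // the page you expect to land on.
        Assert.Contains("Gmail", driver.Title);
    }

    public void Dispose()
    {
        // Close the browser after each data row.
        driver.Quit();
    }
}
```

The method parameters are filled from the columns of each row in order, so the number of parameters should match the number of columns in the named range.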

8. Now you can run these tests using either the Visual Studio runner or ReSharper. There is another simple way to run them: in the xUnit folder where you extracted the downloaded binaries, you will find an executable named "xunit.gui.clr4.x86.exe". Launch it, point it at your DLL in the debug folder, and voilà, the tests will run. (Just remember to add the path to your environment variable.)

9. If you run into an error related to the Jet OLEDB provider, such as this one:

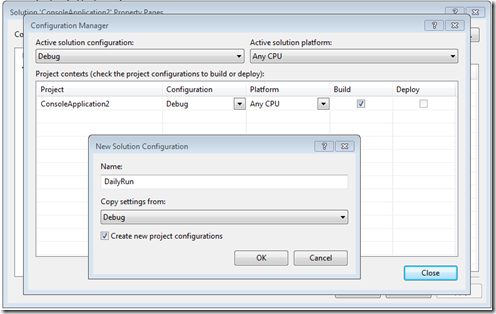

Change the project's platform target to x86. The Jet OLEDB provider is 32-bit only, so the test assembly must run as a 32-bit process.

Project ---> Properties ---> Build ---> Platform target ---> x86

TIPS:

Deleting a named range

- Open Microsoft Excel, then click "File" and open the document containing the named range you want to delete.

- Click the "Formulas" tab and click "Name Manager" in the Defined Names group. A window opens that contains a list of all the named ranges in the document.

- Click the name you want to delete. If you want to delete multiple names in a contiguous group, press the "Shift" key while clicking each name. For names in a non-contiguous group, press "Ctrl" and click each name you want to delete.

- Click "Delete," then confirm the deletion by clicking "OK."

Change a Named Range

- Launch Microsoft Excel and open the file containing the name you want to replace.

- Click the "Formulas" tab. Click "Name Manager" under the Defined Names heading.

- Click the name you want to replace, then click "Edit" in the Name Manager box.

- Enter a new name for the range in the Name box. Change the reference for the name in the Refers To box. Click "OK."

- Change the formula, constant or cell the name represents in the Refers To field in the Name Manager box.

- Click "Commit" to accept the changes.